Alloy / Label Value Organization & Testing / 2019

Evaluating Data Catalog Label Values to Improve Taxonomy and Encourage Functional Usage

Overview

The client's Platform Catalog team wanted to refine the label values that users could apply when tagging data in their data management system. I led research and testing to identify the key issues behind low tagging rates, understand the ambiguity of the labels, and assess the overall lack of organization within the system. Using these insights, I developed a plan for improved data labels and introduced categorization, focusing on clarity, relevance, and usability for data organization. My recommendations included both immediate and long-term action items, prioritized to best address user needs. They were enthusiastically received by the client and won us a contract extension for continued investigation. Ultimately, my research findings led to content and information architecture changes within the client's platform.

Role

Product Designer, Research

Evaluative Research

Usability Testing

Information Architecture

Data Synthesis

Team

Product Designer, Design

Project Manager

Year

2019

Summary

01.

Challenge

The client, a global accounting and consulting firm, partnered with our agency to improve user engagement as they revamped their data management system. Initial data revealed a critical issue: minimal use of both the tagging and search functions. Furthermore, when users did tag data sets, they often applied inaccurate labels. This resulted in poor search accuracy and a lack of overall data organization within the system. My team focused on helping the data catalog team understand the root causes behind these challenges so that we could address the stated problem.

Opportunity

How might we improve the organization and searchability of information within the data system, allowing users across tax and audit divisions to find and analyze data more efficiently for their daily tasks?

Approach

Research Activities:

Open and Closed Card Sorting: We facilitated card sorting exercises with users to understand how they categorized data and perceived the current label options.

User Testing: We conducted user testing to observe in-system behavior and evaluate the clarity and effectiveness of the label list.

Research Goals:

Evaluate Label Viability: Assess the effectiveness of the existing label list in supporting user tagging behavior.

Uncover User Mental Models: Identify the factors and considerations that influence user decisions when assigning labels to data assets.

Refine Label System: Develop recommendations to improve the label list for enhanced discoverability and user tagging accuracy.

Impact

Enhanced Partnership: The project fostered a stronger partnership between Alloy's product team and the client's data management team. This collaboration aimed to improve the platform's search function, directly addressing user frustrations identified in the research.

Avoided Costly Mistakes: By validating that users valued and wanted label tagging, we demonstrated the need for the feature and showed that optimizing it, rather than retiring it, was the right step. We also steered leadership away from the development costs of overhauling the search engine without first building out labels that would resonate with users.

Users for Improvement: Users responded positively to our label testing and appreciated being included. They actively volunteered to consult on further expansion of the label list, eager to contribute their own needs and niche use cases to the process. They also connected us with other relevant colleagues, which supported our recruitment and helped us gain buy-in.

Research

02.

Label Validation Exploration

Goals

Identify trends in list classification

Identify pain points or content gaps related to Line of Service (LoS)

Address recommendations for next steps regarding list creation and user needs

Selected Hypotheses

Common groupings of labels will emerge that align across the LoS and level of experience of users

Users will advocate for and/or prefer the option to create & add their own labels

User Testing Exercises

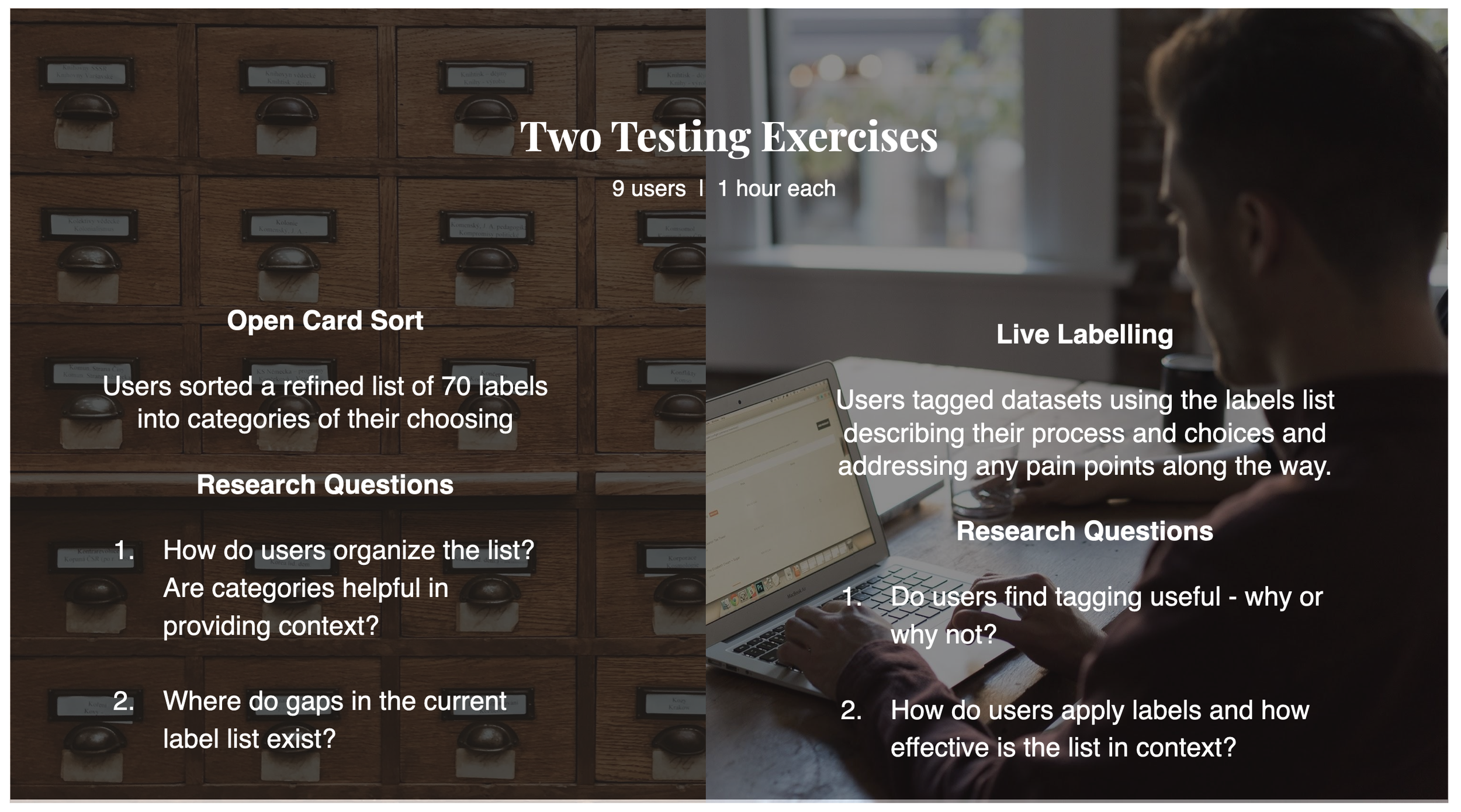

Our participant criteria focused primarily on three specific Lines of Service (LoS) across the tax and audit divisions, and included both junior and senior members of the firm. We conducted 60-minute testing sessions with a total of 9 users.

The first exercise, a card sort, was a two-part activity: a guided sort followed by an unguided sort. Users were first tasked with sorting a refined list of 70 active labels into categories of their choosing. The unguided stage was an opportunity to learn how LoS and level of experience factored into the category delineations of existing labels generated from the first-stage open card sort.
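As an illustration of how card sort results like these can be analyzed, the sketch below counts how often pairs of labels were placed in the same category across participants. This is a common way to surface shared groupings, but it is not necessarily the method used on this project. The label names, category names, and function names are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how many participants placed each pair of labels in the same category.

    `sorts` is a list with one dict per participant, mapping a category name
    (chosen by that participant) to the list of labels placed in it.
    """
    counts = Counter()
    for sort in sorts:
        for labels in sort.values():
            # Sort the labels so each pair is always stored in the same order.
            for a, b in combinations(sorted(set(labels)), 2):
                counts[(a, b)] += 1
    return counts


def agreement(counts, n_participants):
    """Convert pair counts into the share of participants who grouped each pair."""
    return {pair: n / n_participants for pair, n in counts.items()}


# Hypothetical data: three participants sorting three made-up labels.
sorts = [
    {"Finance": ["Invoice", "Ledger"], "People": ["Payroll"]},
    {"Money": ["Invoice", "Ledger", "Payroll"]},
    {"Docs": ["Invoice"], "Accounts": ["Ledger", "Payroll"]},
]

counts = co_occurrence(sorts)
rates = agreement(counts, len(sorts))
```

Pairs with a high agreement rate are candidates for a shared category, while pairs that split by LoS or seniority point to labels whose meaning differs between groups.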

The last exercise was an interactive test where users were prompted to self-generate labels, which would be pulled from the full list of labels. This “live label” test was conducted through an interactive prototype built in-house.

Findings

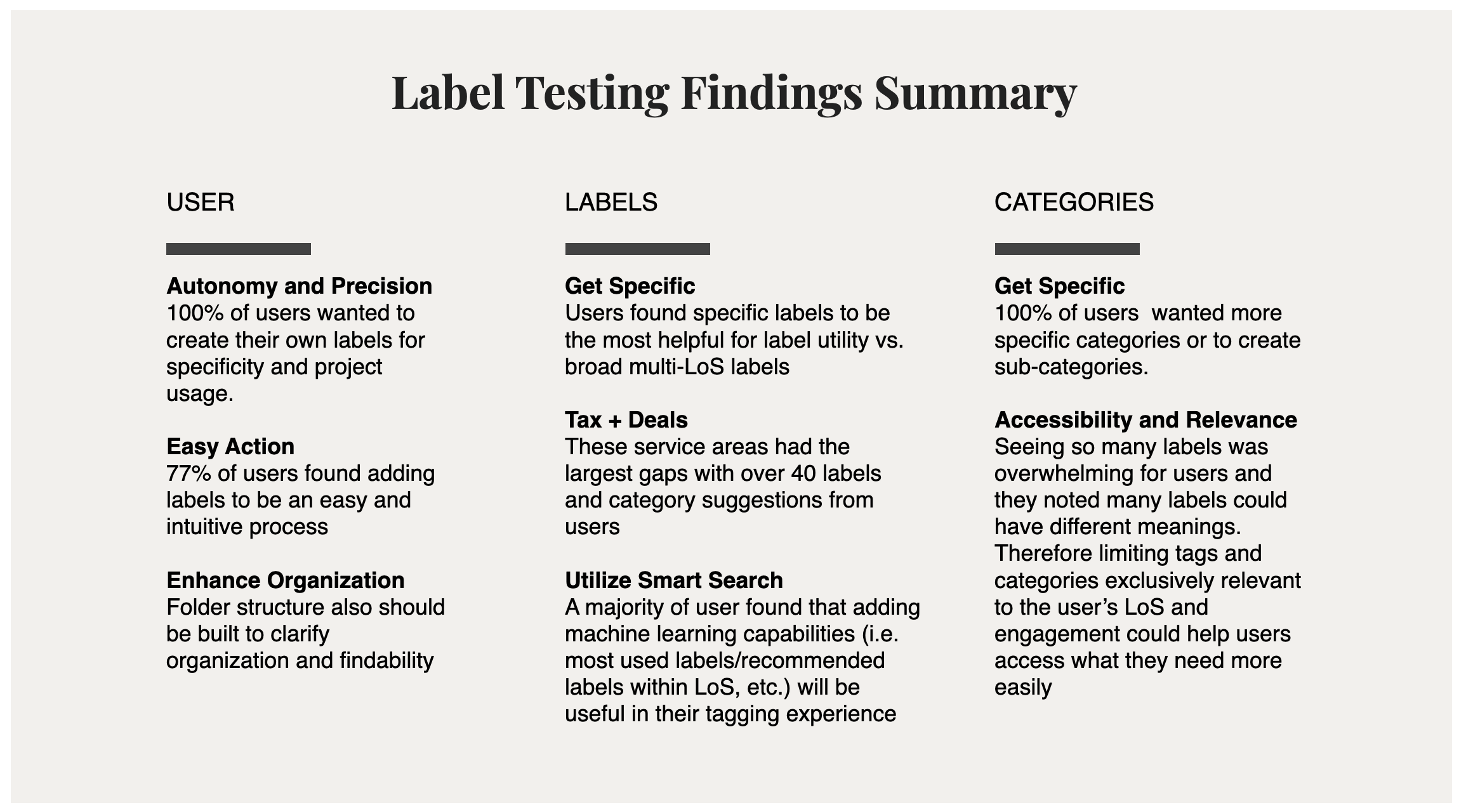

Our user research and testing showed that users found the data tagging feature helpful. Users did want and value the functionality; they just were not using it. This told my team that the problem was not inherent to the feature but rooted in the labels themselves: the quantity of labels, the relevance of the available labels, and the lack of label categorization and definition, among other factors.

What we learned

77% of users found tagging helpful and said they would use tags

77% of users wanted to apply at least 3-5 tags per asset

44% of users found the labels lacking key elements in their LoS or did not find the list useful for their LoS

10% of users wanted an unlimited number of tags to select from and apply

What we find opportune

Users saw the value in using labels, but the way labels were applied to overlapping categories created confusion and made it difficult for them to effectively use the system.

ALL users wanted more granularity in the labels

ALL users confirmed that categories would aid in their search and labelling process

What we recommend

Clarity: Broad terms should be used as categories; for labels, the more specific the better

Findability: Consider offering views based on LoS or engagement, with a View All option

Usability: Use ML for label suggestions and backend filtering

Learnability: Consider color-coding tags by category to help define broader or crossover terms

Conclusion

03.

Impact

The user research findings and recommendations from our work were highly valued by the client. This resulted in several positive outcomes:

Expanded Investigation Approval: Our initial research sparked client interest, leading to approval for further investigation of user tagging behavior. This allowed us to develop a deeper understanding of user needs and begin to test our recommendations before development builds.

Enhanced Partnership: The project fostered a stronger partnership between Alloy's product team and the client's data management team. This collaboration aimed to improve the platform's search function, directly addressing user frustrations identified in the research.

Actionable Recommendations: Client responsiveness translated into concrete actions. The client assigned a dedicated data librarian to work with our team on expanding the label list based on our findings. Additionally, they planned an internal audit of their machine learning capabilities, potentially paving the way for further improvement based on user data.

Next Steps

To ensure a smooth transition for the expanded effort, I undertook the following steps:

Knowledge Transfer: I prepared a comprehensive documentation brief for the Alloy product team members assuming responsibility for the next phase. This document covered the full scope of research and testing conducted, along with our backlog and recommendations for moving forward.

Project Handover: With the documentation in place, I transitioned ownership of the project to the new team members within Alloy. This ensured continuity for the next phase of the research initiative.